Ballerina for AI

AI is no longer just trained — it's integrated.

Ballerina is purpose-built for integration, making it an ideal choice for bringing AI into your applications. With built-in abstractions designed for invoking your favourite large language models and other generative AI technologies, Ballerina simplifies and streamlines AI integration like never before.

Why write AI applications in Ballerina?

Python remains the go-to language for machine learning, data science, and analytics, but integrating AI into modern business applications is a different challenge. That work is about invoking LLMs, engineering prompts, and embedding AI into workflows. Ballerina, a cloud-native language built for integration, offers powerful abstractions for connecting to LLMs and weaving AI into your applications.


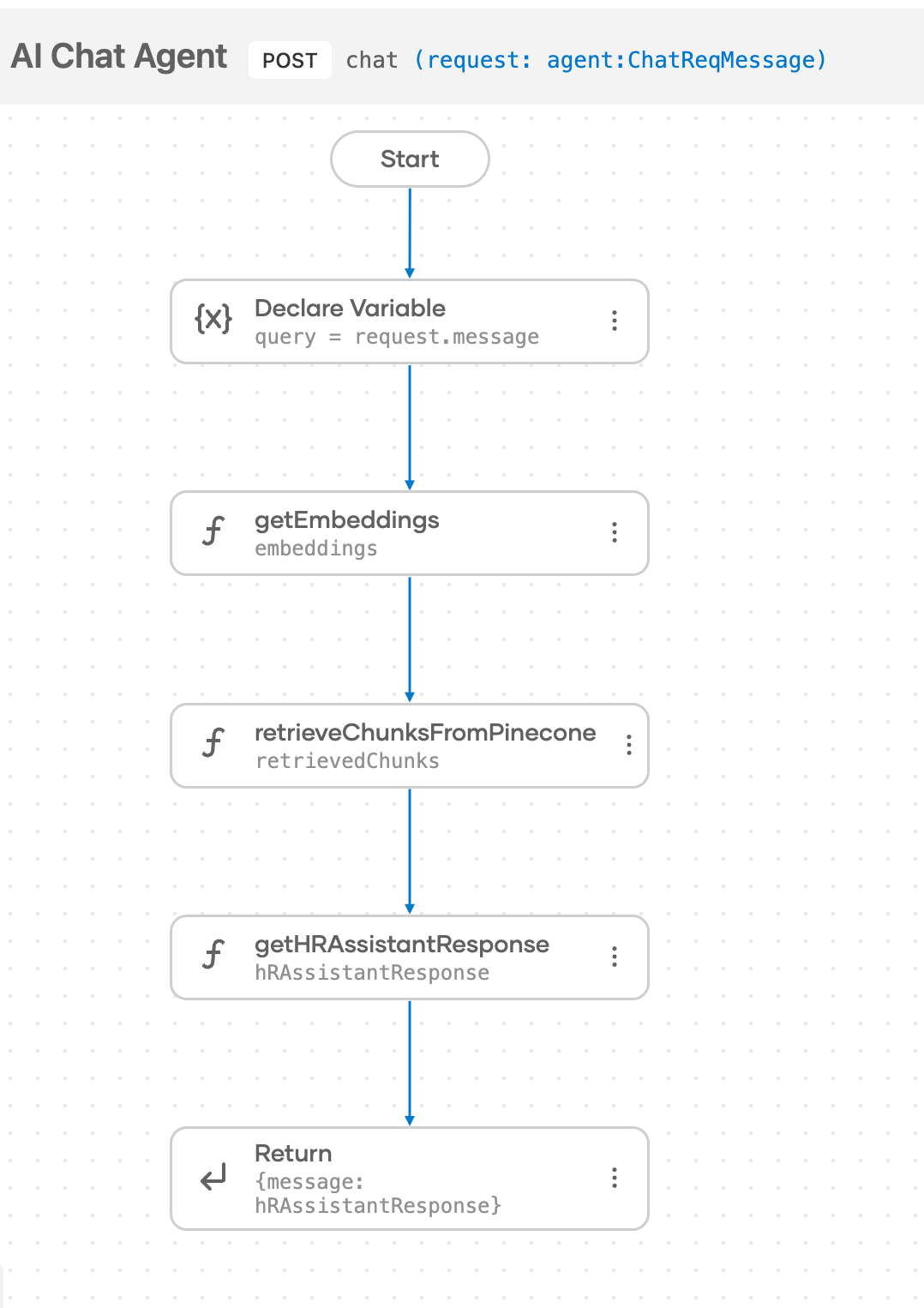

```ballerina
service /agent on hrAgent {

    resource function post chat(@http:Payload agent:ChatReqMessage request) returns agent:ChatRespMessage|error {
        string query = request.message;
        // Convert the user's question into an embedding vector.
        float[] embeddings = check getEmbeddings(query);
        // Look up the most relevant document chunks in Pinecone.
        string retrievedChunks = check retrieveChunksFromPinecone(embeddings);
        // Ask the LLM to answer the question using the retrieved chunks as context.
        string hRAssistantResponse = check getHRAssistantResponse(query, retrievedChunks);
        return {message: hRAssistantResponse};
    }
}
```


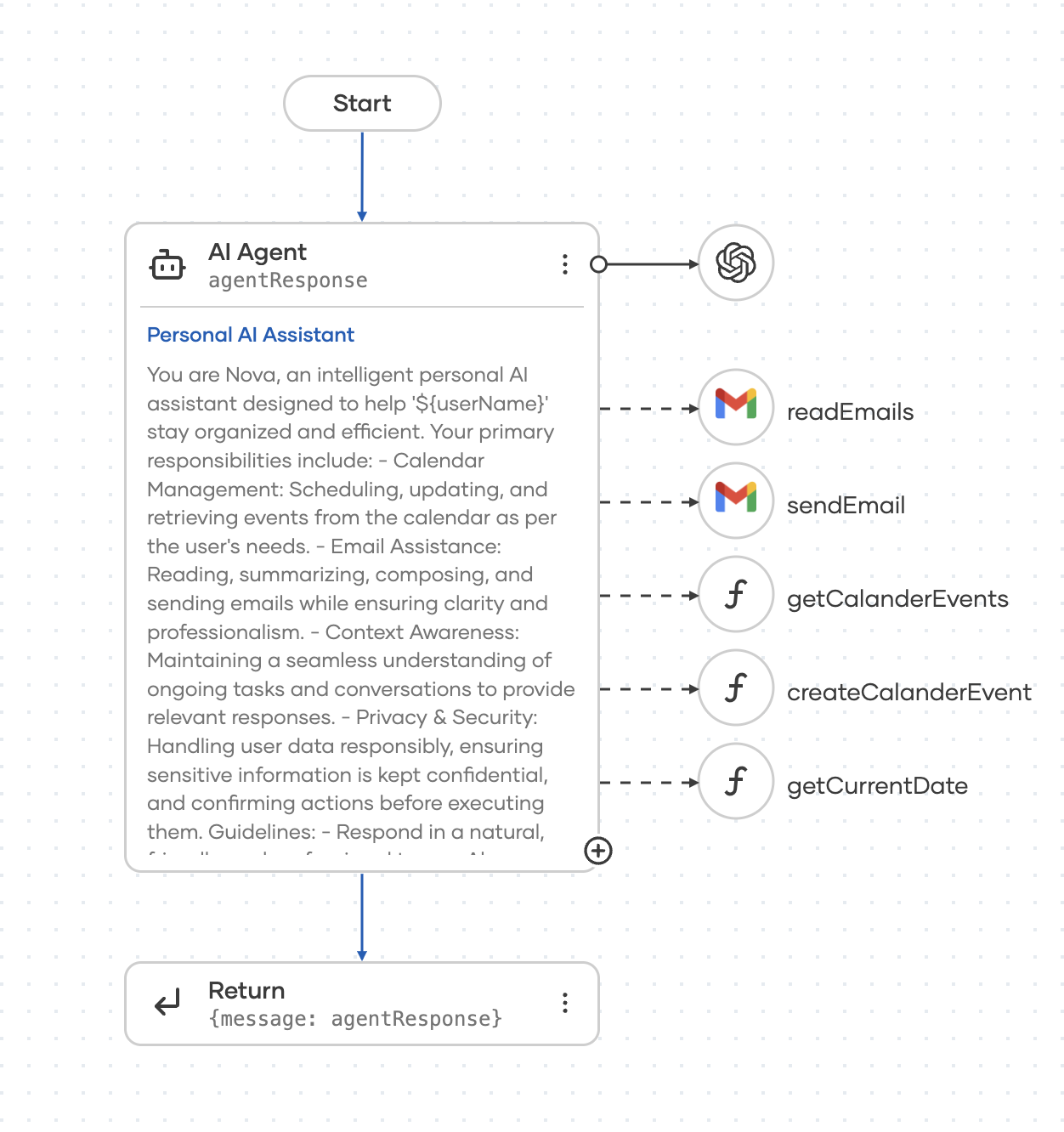
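The HR agent delegates to `getEmbeddings`, `retrieveChunksFromPinecone`, and `getHRAssistantResponse`, whose bodies are not shown. The following sketch shows roughly what the retrieval and prompt-assembly steps involve. It ranks stored chunks by cosine similarity to the query embedding and wraps the best matches in a grounding prompt. It assumes an in-memory list in place of Pinecone. `Chunk`, `retrieveTopChunks`, and `buildRagPrompt` are hypothetical names, not part of any Ballerina library.

```ballerina
import ballerina/io;

// Hypothetical in-memory stand-in for a vector-store entry (e.g. a Pinecone match).
type Chunk record {|
    string text;
    float[] embedding;
|};

// Cosine similarity: dot(a, b) / (|a| * |b|).
function cosineSimilarity(float[] a, float[] b) returns float|error {
    if a.length() != b.length() {
        return error("Embeddings must have the same dimension");
    }
    float dot = 0.0;
    float normA = 0.0;
    float normB = 0.0;
    foreach int i in 0 ..< a.length() {
        dot += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    if normA == 0.0 || normB == 0.0 {
        return error("Cannot compare a zero vector");
    }
    return dot / (normA.sqrt() * normB.sqrt());
}

// Returns the text of the `topK` chunks closest to the query, best match first, joined with newlines.
function retrieveTopChunks(float[] queryEmbedding, Chunk[] chunks, int topK) returns string|error {
    [float, string][] scored = [];
    foreach Chunk chunk in chunks {
        scored.push([check cosineSimilarity(queryEmbedding, chunk.embedding), chunk.text]);
    }
    string[] texts = from var [score, text] in scored
        order by score descending
        limit topK
        select text;
    return string:'join("\n", ...texts);
}

// Hypothetical prompt template that grounds the model's answer in the retrieved context.
function buildRagPrompt(string query, string context) returns string =>
    string `Answer the question using only the context below.
Context:
${context}
Question: ${query}`;

public function main() returns error? {
    Chunk[] chunks = [
        {text: "Employees get 20 days of annual leave.", embedding: [0.9, 0.1, 0.0]},
        {text: "The office opens at 8 a.m.", embedding: [0.0, 0.2, 0.9]}
    ];
    string context = check retrieveTopChunks([1.0, 0.0, 0.0], chunks, 1);
    io:println(buildRagPrompt("How many days of leave do I get?", context));
}
```

A vector database such as Pinecone does the same ranking server-side over an index. Only the top `topK` matches come back over the network.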

Seamless integrations with Ballerina AI agents

Ballerina's AI agents let your applications understand and act on natural language commands by using the reasoning capabilities of LLMs. An agent combines a model, a system prompt, and a set of tools (ordinary Ballerina functions). The model decides which tools to call to fulfil each request, so your application can automate workflows and make decisions autonomously.


```ballerina
agent:SystemPrompt systemPrompt = {
    role: "Personal AI Assistant",
    instructions: string `You are Nova, a smart AI assistant helping '${userName}' stay organized and efficient.

Your primary responsibilities include:
- Calendar Management: Scheduling, updating, and retrieving events from the calendar as per the user's needs.
- Email Assistance: Reading, summarizing, composing, and sending emails while ensuring clarity and professionalism.
- Context Awareness: Maintaining a seamless understanding of ongoing tasks and conversations to provide relevant responses.
- Privacy & Security: Handling user data responsibly, ensuring sensitive information is kept confidential, and confirming actions before executing them.

Guidelines:
- Respond in a natural, friendly, and professional tone.
- Always confirm before making changes to the user's calendar or sending emails.
- Provide concise summaries when retrieving information unless the user requests details.
- Prioritize clarity, efficiency, and user convenience in all tasks.`
};

final agent:AzureOpenAiModel azureOpenAiModel = check new (serviceUrl, apiKey, deploymentId, apiVersion);

// The agent combines the system prompt, the model, and the functions it may call as tools.
final agent:Agent personalAiAssistant = check new (systemPrompt = systemPrompt, model = azureOpenAiModel,
    tools = [readEmails, sendEmail, getCalanderEvents, createCalanderEvent, getCurrentDate]
);

service /personalAiAssistant on new http:Listener(9090) {

    resource function post chat(@http:Payload agent:ChatReqMessage request) returns agent:ChatRespMessage|error {
        // Let the agent reason over the message and invoke tools as needed.
        string agentResponse = check personalAiAssistant->run(request.message);
        return {message: agentResponse};
    }
}
```

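Conceptually, an agent like this one runs a loop. The model either answers or requests a tool, the application runs that tool, and the result is fed back to the model until it produces an answer. The sketch below replaces the LLM with a scripted `decide` function so that the loop itself is visible. `ToolCall`, `decide`, `runTool`, and `runAgent` are hypothetical, and the sketch does not reflect how `agent:Agent` is implemented internally.

```ballerina
import ballerina/io;

// Hypothetical tool-call shape; real agent frameworks define their own.
type ToolCall record {|
    string name;
    string input;
|};

// Stand-in for the LLM's reasoning step. A real agent would send the conversation
// history, system prompt, and tool descriptions to the model and parse its reply.
function decide(string[] history) returns ToolCall|string {
    if history.length() == 1 {
        // First turn: the "model" asks for the current date.
        return {name: "getCurrentDate", input: ""};
    }
    // Later turn: the "model" answers using the latest tool result.
    return string `Today is ${history[history.length() - 1]}.`;
}

// Executes a tool by name. The date is a hard-coded placeholder for this sketch.
function runTool(ToolCall call) returns string|error {
    if call.name == "getCurrentDate" {
        return "2025-06-01";
    }
    return error(string `Unknown tool: ${call.name}`);
}

// Reason-act loop: ask the model, run any requested tool, feed the result back, repeat.
function runAgent(string userMessage, int maxSteps) returns string|error {
    string[] history = [userMessage];
    foreach int _ in 0 ..< maxSteps {
        ToolCall|string decision = decide(history);
        if decision is string {
            return decision;
        }
        history.push(check runTool(decision));
    }
    return error("Agent did not produce an answer within the step limit");
}

public function main() returns error? {
    io:println(check runAgent("What is today's date?", 3));
}
```

The `maxSteps` bound keeps the loop from running forever if the model keeps requesting tools.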

Building an AI-powered blog analyzer using Ballerina's natural expressions

Ballerina's natural expressions let you bring AI-powered capabilities into your workflows using natural language. At runtime, a natural expression sends its prompt to an LLM and returns a typed response. The output is structured and bound to the expression's expected type (in the blog analyzer below, the `Review` record), so it plugs directly into the rest of your code.
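To illustrate what binding a response to an expected type involves, the sketch below performs that step by hand. It parses JSON text, as a model might return it, into a record with the same shape as the blog analyzer's `Review`. It then enforces the rating range and category list that the prompt asks for. This is not how natural expressions are implemented. `bindReview` is a hypothetical helper built only on the `fromJsonStringWithType` langlib function.

```ballerina
import ballerina/io;

// Same shape as the Review record in the blog analyzer example.
type Review record {|
    string? suggestedCategory;
    int rating;
|};

final readonly & string[] categories = ["Gardening", "Sports", "Health", "Technology", "Travel"];

// Binds raw JSON text to Review, then enforces the constraints stated in the prompt:
// a rating from 1 to 10, and either a known category or null.
function bindReview(string modelOutput) returns Review|error {
    Review review = check modelOutput.fromJsonStringWithType();
    if review.rating < 1 || review.rating > 10 {
        return error(string `Rating out of range: ${review.rating}`);
    }
    string? category = review.suggestedCategory;
    if category is string {
        if categories.indexOf(category) is () {
            return error(string `Unknown category: ${category}`);
        }
    }
    return review;
}

public function main() returns error? {
    Review review = check bindReview(string `{"suggestedCategory": "Health", "rating": 8}`);
    io:println(review);
}
```

Because `Review` is a closed record, the binding also rejects missing or unexpected fields.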

```ballerina
import ballerina/ai;
import ballerina/http;

final readonly & string[] categories = ["Gardening", "Sports", "Health", "Technology", "Travel"];

public type Blog record {|
    string title;
    string content;
|};

type Review record {|
    string? suggestedCategory;
    int rating;
|};

// The natural expression sends the prompt to the default model provider and
// binds the response to the function's return type, Review.
public isolated function reviewBlog(Blog blog) returns Review|error => natural (check ai:getDefaultModelProvider()) {
    You are an expert content reviewer for a blog site that
    categorizes posts under the following categories: ${categories}

    Your tasks are:

    1. Suggest a suitable category for the blog from exactly the specified categories.
       If there is no match, use null.

    2. Rate the blog post on a scale of 1 to 10 based on the following criteria:
    - **Relevance**: How well the content aligns with the chosen category.
    - **Depth**: The level of detail and insight in the content.
    - **Clarity**: How easy it is to read and understand.
    - **Originality**: Whether the content introduces fresh perspectives or ideas.
    - **Language Quality**: Grammar, spelling, and overall writing quality.

    Here is the blog post content:

    Title: ${blog.title}
    Content: ${blog.content}
};

service /blogs on new http:Listener(8088) {

    resource function post review(Blog blog) returns Review|http:InternalServerError {
        Review|error review = reviewBlog(blog);
        if review is error {
            return {
                body: "Failed to review the blog post"
            };
        }
        return review;
    }
}
```

Natural Programming: Compile-time Code Generation

Bring natural language into your compilation process and generate code from a prompt at compile time. It is like having a copilot built into your build process.

- Compile-time data generation – Use `const natural` expressions to generate structured, realistic, and type-safe data during compilation. This is ideal for generating meaningful test data.
- Compile-time function generation – Annotate external function bodies with `@natural:code` and let the compiler generate the implementations from natural language prompts.
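For comparison, here is one hand-written body that satisfies the prompt given to `@natural:code` in the example that follows. It only sketches the intended behavior; the compiler-generated implementation may look different. The `main` function and its three-item catalog are illustrative.

```ballerina
import ballerina/io;

public type Product record {|
    string id;
    decimal price;
|};

// Hand-written equivalent of the prompt: keep products priced strictly above the threshold.
function filterProductsAbovePrice(Product[] products, decimal priceThreshold) returns Product[] {
    return from Product product in products
        where product.price > priceThreshold
        select product;
}

public function main() {
    Product[] catalog = [
        {id: "PROD001", price: 999.99},
        {id: "PROD002", price: 699.99},
        {id: "PROD003", price: 29.99}
    ];
    // Keeps only PROD001 and PROD002.
    io:println(filterProductsAbovePrice(catalog, 100));
}
```

A test like `testFilterProducts` checks the behavior, not the code. It should pass whether the body is written by hand or generated.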

import ballerina/http;

public type Product record {|

string id;

decimal price;

|};

final Product[] productCatalog = [

{id: "PROD001", price: 999.99},

{id: "PROD002", price: 699.99},

{id: "PROD003", price: 29.99},

{id: "PROD004", price: 19.99},

{id: "PROD005", price: 49.99}

];

function filterProductsAbovePrice(Product[] products, decimal priceThreshold) returns Product[] = @natural:code {

prompt: "Filter products that have a price greater than the given price threshold."

} external;

service / on new http:Listener(8080) {

resource function get products/filter(decimal minPrice) returns Product[]|http:BadRequest {

return filterProductsAbovePrice(productCatalog, minPrice);

}

}import ballerina/test;

function getTestProducts() returns Product[] =>

const natural {

Generate a list of products with:

- id: random ID that starts with "PROD". E.g., "PROD001"

- price: random decimal between 10.0 and 1000.0

};

@test:Config

function testFilterProducts() returns error? {

Product[] testProducts = getTestProducts();

Product[] filteredProducts = filterProductsAbovePrice(testProducts, 100);

foreach Product product in testProducts {

int? index = filteredProducts.indexOf(product);

if product.price > 100d {

test:assertNotEquals(index, ());

} else {

test:assertEquals(index, ());

}

}

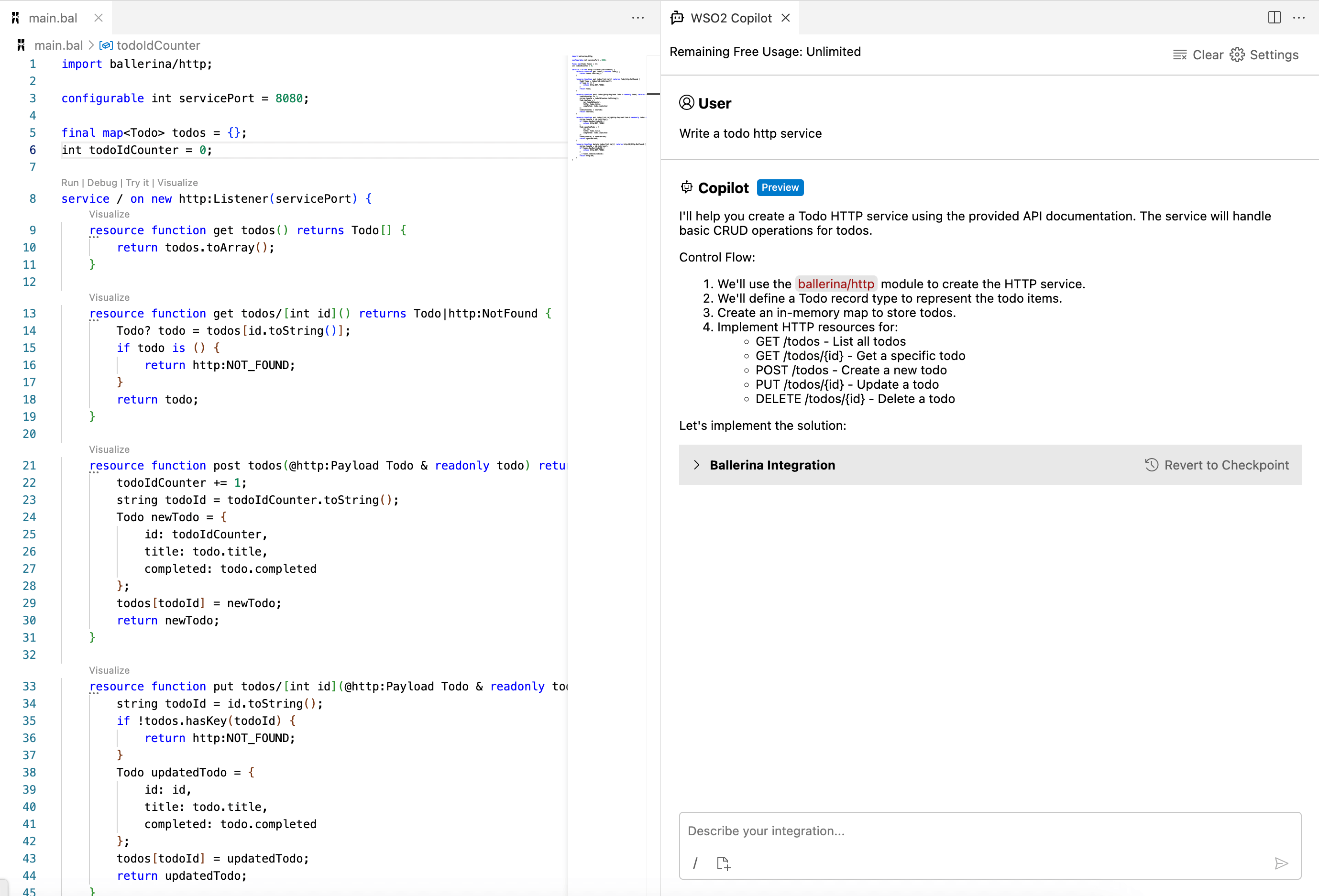

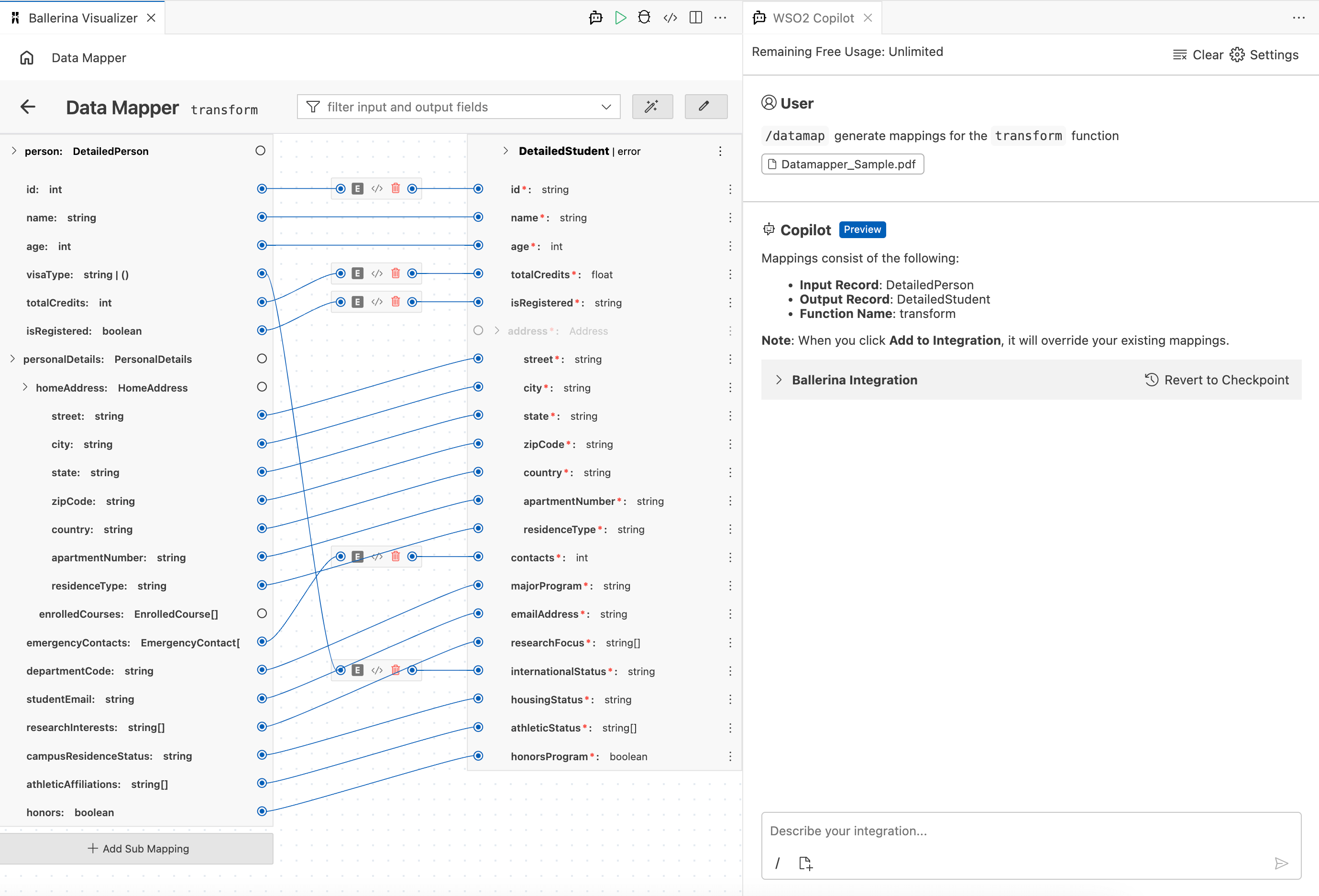

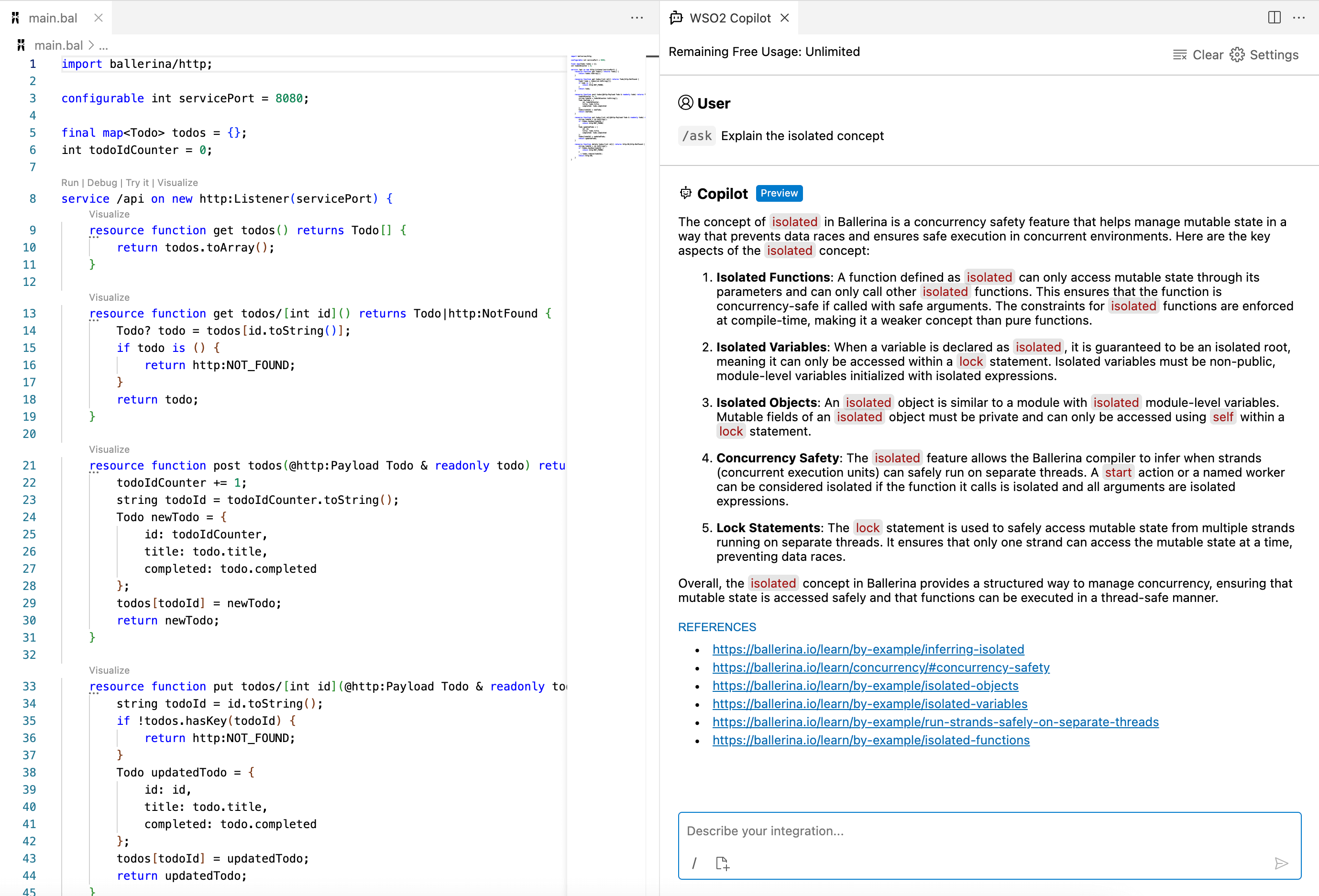

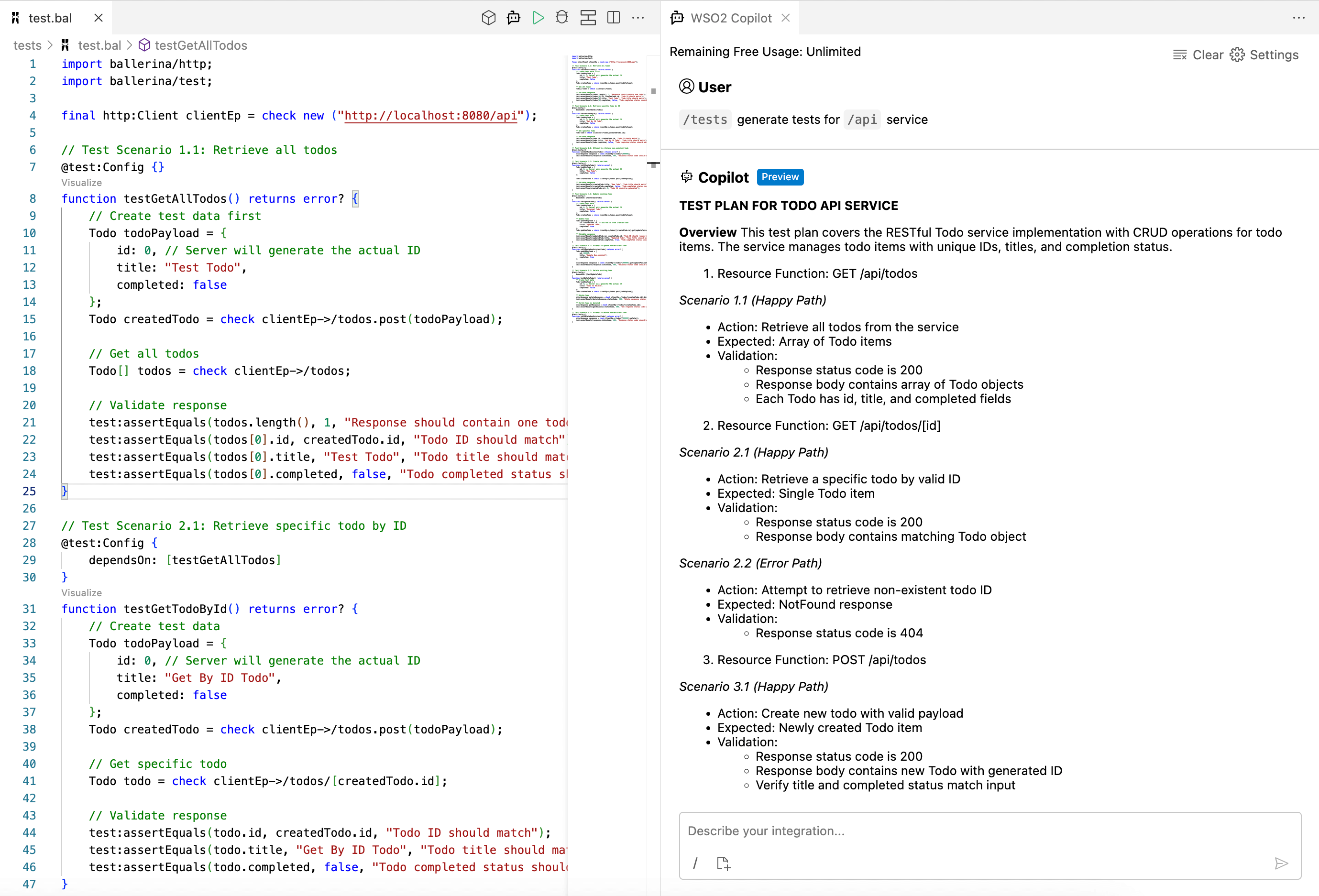

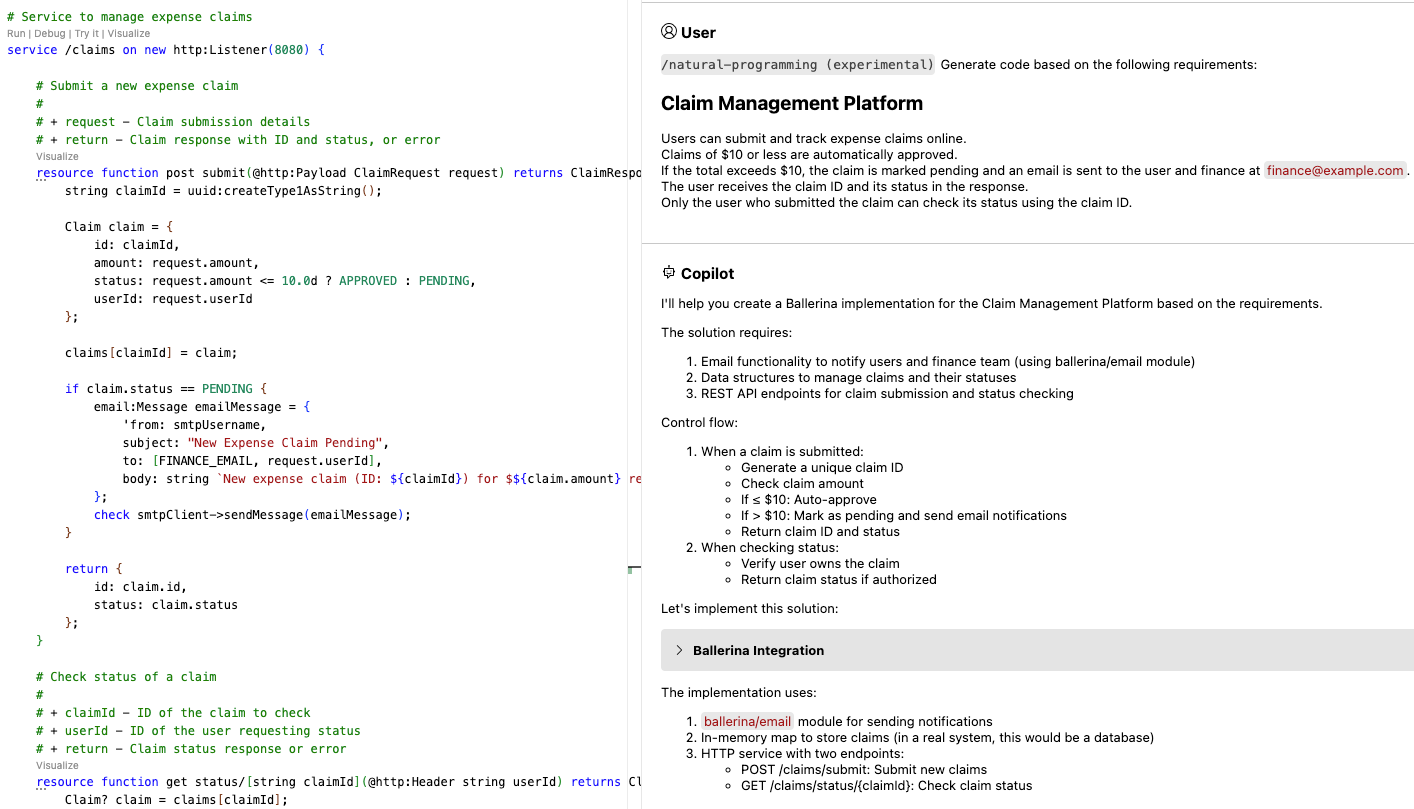

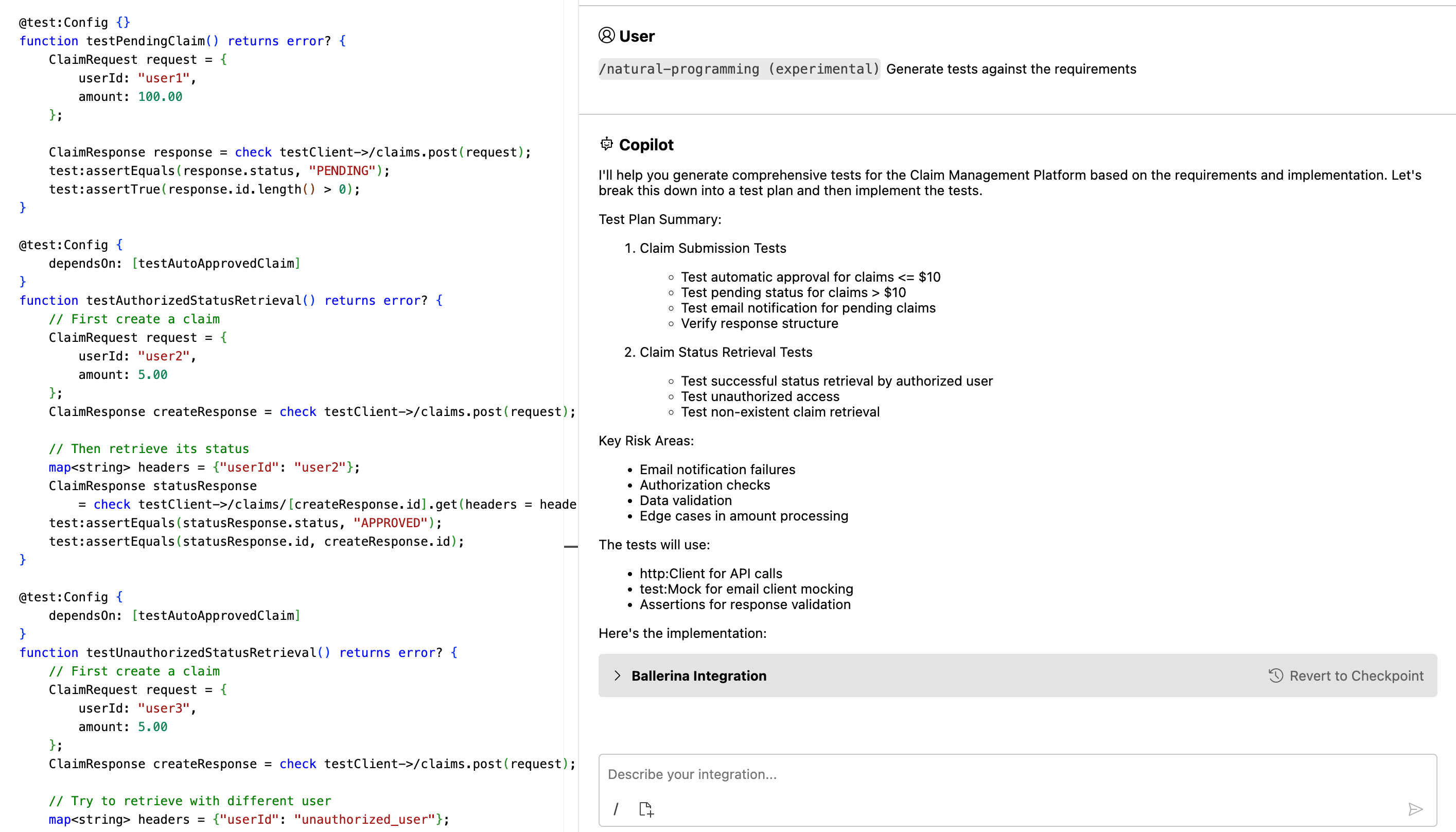

}Ballerina Copilot: Your AI partner in integration development

Ballerina Copilot makes it easy to build both AI and non-AI integrations using natural language: tell it what you want, and it generates the integration for you. Whether you're connecting to an LLM or stitching together APIs, Copilot helps you move fast with less code. And the fun part? Ballerina Copilot is written in Ballerina itself!

Natural Programming: Bridge Requirements and Code

Leverage generative AI to keep requirements, code, and documentation in sync.

- Code generation – Generate code from high-level business requirements.
- Drift detection and fixes – Proactively identify drift between requirements, code, and documentation, and address it with AI-powered suggestions in VS Code.
- Test generation – Generate Ballerina tests against business requirements to enable test-driven drift detection.
- Intent preservation – Maintain a summary of the prompts used for code generation to preserve the user's intent.

Simple when you want it. Powerful when you need it.

Ballerina simplifies AI integration with high-level abstractions while giving you full access to model provider APIs through type-safe connectors. When you need a provider feature that the abstractions don't expose, such as tool calling, image input, or Azure OpenAI deployments, you can call the provider's API directly without leaving the language or its ecosystem.
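With OpenAI tool calling, the model does not run anything itself. It returns the name of the function to call and its arguments as a JSON-encoded string. Your code then executes the function and sends the result back in a follow-up message with the `tool` role. The following minimal sketch shows the execution step for the `get_weather` tool declared in the tool-calling example. It assumes a stubbed weather lookup. `WeatherArgs`, `getWeather`, and `handleToolCall` are hypothetical names, not connector APIs.

```ballerina
import ballerina/io;

// Arguments declared by the get_weather JSON schema in the tool-calling example.
type WeatherArgs record {|
    string location;
|};

// Hypothetical stub; a real implementation would call a weather API.
function getWeather(WeatherArgs args) returns string =>
    string `Sunny in ${args.location}.`;

// Dispatches one tool call by name. `arguments` is the JSON-encoded string the model produced.
// Binding it to the closed WeatherArgs record rejects missing or extra fields,
// mirroring `additionalProperties: false` in the schema.
function handleToolCall(string name, string arguments) returns string|error {
    if name != "get_weather" {
        return error(string `Unsupported tool: ${name}`);
    }
    WeatherArgs args = check arguments.fromJsonStringWithType();
    return getWeather(args);
}

public function main() returns error? {
    io:println(check handleToolCall("get_weather", string `{"location": "Paris, France"}`));
}
```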

```ballerina
import ballerina/io;
import ballerinax/openai.chat;

configurable string openAIToken = ?;

public function main(string filePath) returns error? {
    chat:Client openAIChat = check new ({auth: {token: openAIToken}});

    string fileContent = check io:fileReadString(filePath);
    io:println(string `Content: ${fileContent}`);

    chat:CreateChatCompletionRequest request = {
        model: "gpt-4o-mini",
        messages: [
            {
                "role": "user",
                "content": "Summarize:\n" + fileContent
            }
        ],
        max_tokens: 2000
    };

    chat:CreateChatCompletionResponse response = check openAIChat->/chat/completions.post(request);
    string? summary = response.choices[0].message.content;
    if summary is () {
        return error("Failed to summarize the given text.");
    }
    io:println(string `Summary: ${summary}`);
}
```

```ballerina
public function main() returns error? {
    // JSON schema describing the arguments of the get_weather function.
    ParamterSchema param = {
        'type: "object",
        properties: {
            "location": {
                'type: "string",
                description: "City and country e.g. Bogotá, Colombia"
            }
        },
        required: ["location"],
        additionalProperties: false
    };

    chat:CreateChatCompletionRequest request = {
        model: "gpt-4o-mini",
        messages: [
            {
                role: "user",
                content: string `What is the weather like in Paris today?`
            }
        ],
        tools: [
            {
                'type: "function",
                'function: {
                    name: "get_weather",
                    description: "Get current temperature for a given location.",
                    parameters: param,
                    strict: true
                }
            }
        ]
    };

    chat:CreateChatCompletionResponse completionRes = check openAIChat->/chat/completions.post(request);
    // The model does not call the function itself; it returns which tool to call and with what arguments.
    chat:ChatCompletionMessageToolCalls toolCalls = check completionRes.choices[0].message.tool_calls.ensureType();
    io:println("Tool Calls: ", toolCalls);
}
```

```ballerina
public function main() returns error? {
    // Get information on upcoming and recently released movies from TMDB.
    final themoviedb:Client moviedb = check new themoviedb:Client({apiKey: moviedbApiKey});
    themoviedb:InlineResponse2001 upcomingMovies = check moviedb->getUpcomingMovies();

    // Generate a creative tweet using Azure OpenAI.
    string prompt = "Instruction: Generate a creative and short tweet below 250 characters about the following " +
        "upcoming and recently released movies. Movies: ";
    foreach int i in 1 ... NO_OF_MOVIES {
        prompt += string `${i}. ${upcomingMovies.results[i - 1].title} `;
    }

    final twitter:Client twitter = check new ({
        auth: {token: token}
    });
    final chat:Client chatClient = check new (config = {auth: {apiKey: openAIToken}}, serviceUrl = serviceUrl);

    chat:CreateChatCompletionRequest chatBody = {
        messages: [{role: "user", "content": prompt}]
    };
    chat:CreateChatCompletionResponse chatResult = check chatClient->/deployments/[deploymentId]/chat/completions.post("2023-12-01-preview", chatBody);

    record {|chat:ChatCompletionResponseMessage message?; chat:ContentFilterChoiceResults content_filter_results?; int index?; string finish_reason?; anydata...;|}[] choices = check chatResult.choices.ensureType();
    string? tweetContent = choices[0].message?.content;
    if tweetContent is () {
        return error("Failed to generate a tweet on upcoming and recently released movies.");
    }
    if tweetContent.length() > MAX_TWEET_LENGTH {
        return error("The generated tweet exceeded the maximum supported character length.");
    }

    // Tweet it out!
    twitter:TweetCreateResponse tweet = check twitter->/tweets.post(payload = {text: tweetContent});
    io:println("Tweet: ", tweet);
}
```

Beyond text: Multimodal AI at your fingertips

AI isn’t just about text—and neither is Ballerina. With connectors to popular AI providers, you can integrate image generation, audio synthesis, and other multimodal capabilities into your applications. Whatever the medium, Ballerina helps you bring it all together through a unified, type-safe integration layer.
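The image-description example passes a public URL. For a local file, OpenAI's vision-capable chat models also accept the image inline as a base64 data URL in the same `image_url.url` field. The following small sketch builds one with the `toBase64` langlib function. `toDataUrl` is a hypothetical helper, and the `image/jpeg` MIME type is an assumption about the input file.

```ballerina
import ballerina/io;

// Encodes raw image bytes as a base64 data URL that can be used in place of a public image link.
function toDataUrl(byte[] imageBytes, string mimeType) returns string =>
    string `data:${mimeType};base64,${imageBytes.toBase64()}`;

public function main(string imagePath) returns error? {
    byte[] imageBytes = check io:fileReadBytes(imagePath);
    // Assumes the file is a JPEG; adjust the MIME type for other formats.
    string dataUrl = toDataUrl(imageBytes, "image/jpeg");
    // The full string can be very long, so print only its length.
    io:println("Data URL length: ", dataUrl.length());
}
```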

```ballerina
public function main() returns error? {
    chat:CreateChatCompletionRequest request = {
        model: "gpt-4o-mini",
        messages: [
            {
                role: "user",
                // A single message can mix text and image parts.
                content: [
                    {
                        'type: "text",
                        text: "What is in this image?"
                    },
                    {
                        'type: "image_url",
                        image_url: {
                            url: "https://upload.wikimedia.org/wikipedia/commons/thumb/d/dd/Gfp-wisconsin-madison-the-nature-boardwalk.jpg/2560px-Gfp-wisconsin-madison-the-nature-boardwalk.jpg"
                        }
                    }
                ]
            }
        ]
    };

    chat:CreateChatCompletionResponse completionRes = check openAIChat->/chat/completions.post(request);
    string content = check completionRes.choices[0].message.content.ensureType();
    io:println("Photo Description: ", content);
}
```

```ballerina
service / on new http:Listener(9090) {

    resource function post products() returns int|error {
        // Get the product details from the last inserted row of the Google Sheet.
        sheets:Range range = check gsheets->getRange(googleSheetId, "Sheet1", "A2:F");
        var [name, benefits, features, productType] = getProduct(range);

        // Generate a product description from OpenAI for the given product name.
        string query = string `Generate a product description in 250 words about ${name}`;
        chat:CreateChatCompletionRequest request = {
            model: "gpt-4o",
            messages: [
                {
                    "role": "user",
                    "content": query
                }
            ],
            // 250 words need well over 100 tokens; leave room so the description is not cut off.
            max_tokens: 500
        };
        chat:CreateChatCompletionResponse completionRes = check openAIChat->/chat/completions.post(request);

        // Generate a product image from OpenAI for the given product.
        images:CreateImageRequest imagePrmt = {prompt: string `${name}, ${benefits}, ${features}`};
        images:ImagesResponse imageRes = check openAIImages->/images/generations.post(imagePrmt);

        // Create a product in Shopify.
        shopify:CreateProduct product = {
            product: {
                title: name,
                body_html: completionRes.choices[0].message.content,
                tags: features,
                product_type: productType,
                images: [{src: imageRes.data[0].url}]
            }
        };
        shopify:ProductObject prodObj = check shopify->createProduct(product);
        int? pid = prodObj?.product?.id;
        if pid is () {
            return error("Error in creating product in Shopify");
        }
        return pid;
    }
}
```

```ballerina
public function main(string audioURL, string toLanguage) returns error? {
    // Create an HTTP client to download the audio file.
    http:Client audioEP = check new (audioURL);
    http:Response httpResp = check audioEP->get("");
    byte[] audioBytes = check httpResp.getBinaryPayload();
    check io:fileWriteBytes(AUDIO_FILE_PATH, audioBytes);

    // Create a request to translate the audio file to English text.
    audio:CreateTranslationRequest translationsReq = {
        file: {fileContent: check io:fileReadBytes(AUDIO_FILE_PATH), fileName: AUDIO_FILE},
        model: "whisper-1"
    };

    // Translate the audio file to English text.
    audio:Client openAIAudio = check new ({auth: {token: openAIToken}});
    audio:CreateTranscriptionResponse transcriptionRes = check openAIAudio->/audio/translations.post(translationsReq);
    io:println("Audio text in English: ", transcriptionRes.text);

    final chat:Client openAIChat = check new ({
        auth: {
            token: openAIToken
        }
    });

    // Create a request to translate the text from English to the target language.
    string query = string `Translate the following text from English to ${toLanguage}: ${transcriptionRes.text}`;
    chat:CreateChatCompletionRequest request = {
        model: "gpt-4o",
        messages: [
            {
                "role": "user",
                "content": query
            }
        ],
        temperature: 0.7,
        max_tokens: 256,
        top_p: 1,
        frequency_penalty: 0,
        presence_penalty: 0
    };

    // Translate the text from English to the target language.
    chat:CreateChatCompletionResponse response = check openAIChat->/chat/completions.post(request);
    string? translatedText = response.choices[0].message.content;
    if translatedText is () {
        return error("Failed to translate the given audio.");
    }
    io:println("Translated text: ", translatedText);
}
```

```ballerina
public function main(string podcastURL) returns error? {
    // Create an HTTP client to download the audio file.
    http:Client podcastEP = check new (podcastURL);
    http:Response httpResp = check podcastEP->get("");
    byte[] audioBytes = check httpResp.getBinaryPayload();
    check io:fileWriteBytes(AUDIO_FILE_PATH, audioBytes);

    // Create a request to transcribe the audio file to text.
    audio:CreateTranscriptionRequest transcriptionsReq = {
        file: {fileContent: (check io:fileReadBytes(AUDIO_FILE_PATH)), fileName: AUDIO_FILE},
        model: "whisper-1"
    };

    // Convert the audio file to text using the OpenAI speech-to-text API.
    audio:Client openAIAudio = check new ({auth: {token: openAIToken}});
    audio:CreateTranscriptionResponse transcriptionsRes = check openAIAudio->/audio/transcriptions.post(transcriptionsReq);
    io:println("Text from the audio: ", transcriptionsRes.text);

    // Create a request to summarize the text.
    chat:CreateChatCompletionRequest request = {
        model: "gpt-4o-mini",
        messages: [
            {
                role: "user",
                content: string `Summarize the following text in under 100 characters: ${transcriptionsRes.text}`
            }
        ],
        temperature: 0.7,
        max_tokens: 256,
        top_p: 1,
        frequency_penalty: 0,
        presence_penalty: 0
    };

    // Summarize the text using the OpenAI chat completions API.
    final chat:Client openAIChat = check new ({auth: {token: openAIToken}});
    chat:CreateChatCompletionResponse completionRes = check openAIChat->/chat/completions.post(request);
    string? summarizedText = completionRes.choices[0].message.content;
    if summarizedText is () {
        return error("Failed to summarize the given audio.");
    }
    io:println("Summarized text: ", summarizedText);

    // Tweet it out!
    final twitter:Client twitter = check new ({auth: {token: token}});
    twitter:TweetCreateResponse tweet = check twitter->/tweets.post({text: summarizedText});
    io:println("Tweet: ", tweet);
}
```

(Extra!) Deploy AI-Powered Integrations with Devant

Devant by WSO2 is an AI-powered iPaaS built for the era of intelligent applications. Go beyond building—deploy, scale, and manage AI integrations effortlessly. Whether it’s GenAI models, knowledge bases, or custom AI Agents, Devant helps you operationalize them with low-code speed and cloud-native ease. From idea to live integration—Devant gets you there faster.