Ballerina for AI as a Service

AI is no longer only about training models. Increasingly, it is about invoking APIs, with AI available as a service from OpenAI, Microsoft, Google, Facebook, and others.

Ballerina is the best language to write your AI-powered applications that consume LLMs and other generative models.

Why should you write AI applications in Ballerina?

For many years, Python, a wonderful language, has been the de facto choice for data analytics, data science, and machine learning. But using LLMs to add AI to business applications is less about those problems and more about prompt engineering, fine-tuning, calling the APIs of hosted LLMs, chaining LLMs, and combining them with other APIs.

Ballerina is your best choice for writing modern cloud-native applications that incorporate LLM-powered AI!


public function main(string audioURL, string translatingLanguage) returns error? {

// Creates a HTTP client to download the audio file

http:Client audioEP = check new (audioURL);

http:Response httpResp = check audioEP->/get();

byte[] audioBytes = check httpResp.getBinaryPayload();

check io:fileWriteBytes(AUDIO_FILE_PATH, audioBytes);

// Creates a request to translate the audio file to text (English)

audio:CreateTranslationRequest translationsReq = {

file: {fileContent: check io:fileReadBytes(AUDIO_FILE_PATH), fileName: AUDIO_FILE},

model: "whisper-1"

};

// Translates the audio file to text (English)

audio:Client openAIAudio = check new ({auth: {token: openAIKey}});

audio:CreateTranscriptionResponse transcriptionRes =

check openAIAudio->/audio/translations.post(translationsReq);

io:println("Audio text in English: ", transcriptionRes.text);

// Creates a request to translate the text from English to another language

text:CreateCompletionRequest completionReq = {

model: "text-davinci-003",

prompt: string `Translate the following text from English to ${

translatingLanguage} : ${transcriptionRes.text}`,

temperature: 0.7,

max_tokens: 256,

top_p: 1,

frequency_penalty: 0,

presence_penalty: 0

};

// Translates the text from English to another language

text:Client openAIText = check new ({auth: {token: openAIKey}});

text:CreateCompletionResponse completionRes =

check openAIText->/completions.post(completionReq);

string translatedText = check completionRes.choices[0].text.ensureType();

io:println("Translated text: ", translatedText);

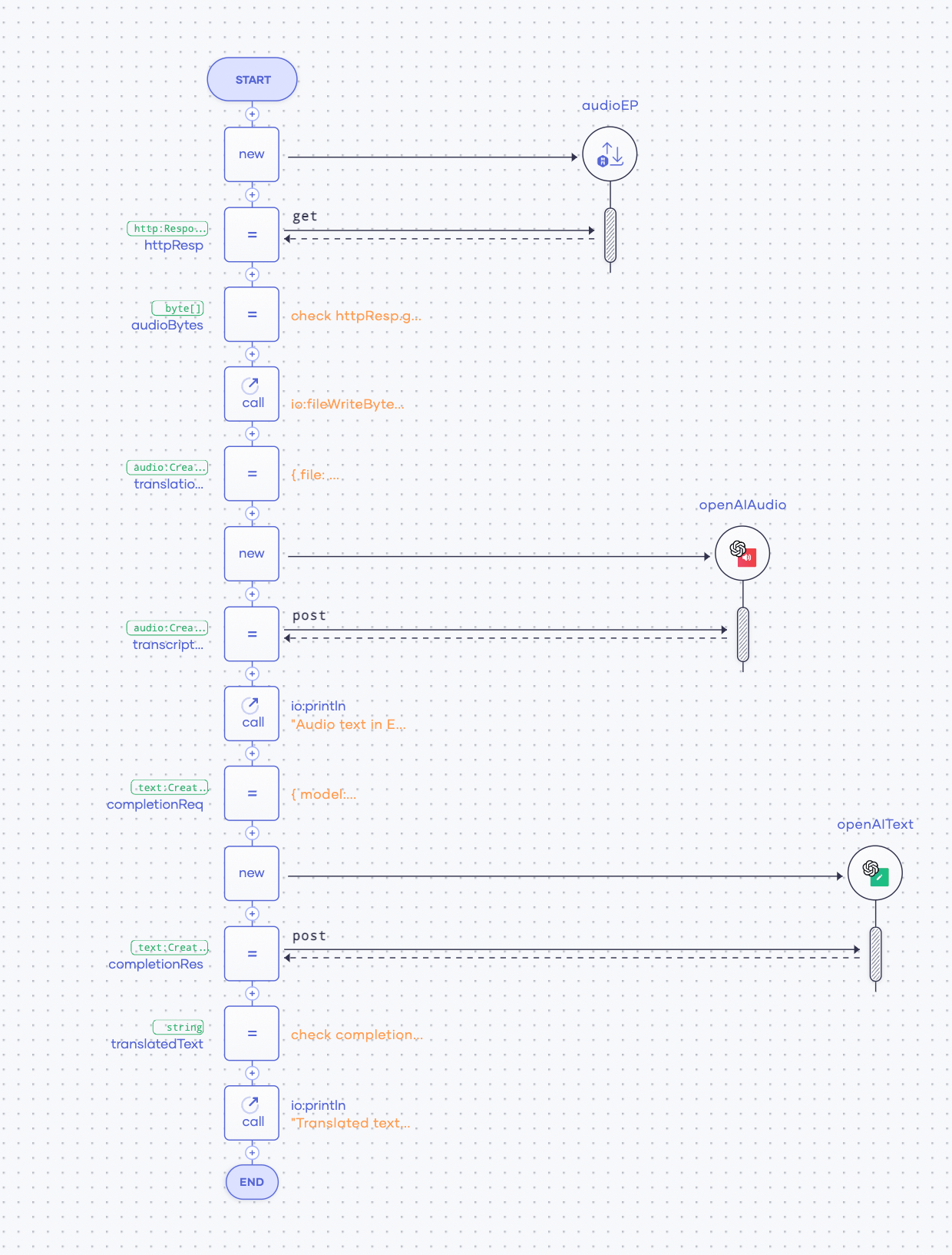

}Diagram


Bring text alive with the chat, completions, edits, and moderation APIs of Azure and OpenAI

The Azure OpenAI and OpenAI text APIs let you generate, summarize, edit, and moderate text with a few API calls. Ballerina connectors for these APIs give you type-safe, structured ways to build applications quickly.
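In these examples, the text of a completion choice is an optional `string?`, so each program checks for `()` before using it. A small helper can centralize that check. The sketch below is plain Ballerina with no connector calls; `requireText`, its parameters, and its error messages are hypothetical, and trimming surrounding whitespace is an assumption about what callers want.

```ballerina
import ballerina/io;

// Hypothetical helper (not part of any connector): turns the optional text of a
// completion choice into a trimmed string, or returns an error if nothing usable came back.
function requireText(string? choiceText, string task) returns string|error {
    if choiceText is () {
        return error(string `No text was returned for the task: ${task}`);
    }
    // Completion text can begin with blank lines, so trim surrounding whitespace.
    string trimmed = choiceText.trim();
    if trimmed.length() == 0 {
        return error(string `Only whitespace was returned for the task: ${task}`);
    }
    return trimmed;
}

public function main() returns error? {
    // Stand-in values for `completionRes.choices[0].text`.
    string summary = check requireText("\n\nBallerina is a cloud-native language.", "summarize");
    io:println(summary);

    string|error missing = requireText((), "summarize");
    if missing is error {
        io:println("Error: ", missing.message());
    }
}
```

With such a helper, a call site shrinks to a single line, for example `string summary = check requireText(completionRes.choices[0].text, "summarize");`.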

```ballerina
public function main(string filePath) returns error? {
    text:Client openAIText = check new ({auth: {token: openAIToken}});
    string fileContent = check io:fileReadString(filePath);
    io:println(string `Content: ${fileContent}`);

    // String templates are raw, so the newline is inserted with ${"\n"}
    text:CreateCompletionRequest textPrompt = {
        prompt: string `Summarize:${"\n"}${fileContent}`,
        model: "text-davinci-003",
        max_tokens: 2000
    };
    text:CreateCompletionResponse completionRes =
        check openAIText->/completions.post(textPrompt);

    string? summary = completionRes.choices[0].text;
    if summary is () {
        return error("Failed to summarize the given text.");
    }
    io:println(string `Summary: ${summary}`);
}
```

```ballerina
public function main(string filePath) returns error? {
    http:RetryConfig retryConfig = {
        interval: 5, // Initial retry interval in seconds.
        count: 3, // Number of retry attempts before stopping.
        backOffFactor: 2.0 // Multiplier of the retry interval.
    };
    final text:Client openAIText = check new ({auth: {token: openAIToken}, retryConfig});

    text:CreateEditRequest editReq = {
        input: check io:fileReadString(filePath),
        instruction: "Fix grammar and spelling mistakes.",
        model: "text-davinci-edit-001"
    };
    text:CreateEditResponse editRes = check openAIText->/edits.post(editReq);

    string? text = editRes.choices[0].text;
    if text is () {
        return error("Failed to correct grammar and spelling in the given text.");
    }
    io:println(string `Corrected: ${text}`);
}
```

```ballerina
public function main() returns error? {
    // Get information on upcoming and recently released movies from TMDB
    final themoviedb:Client moviedb = check new themoviedb:Client({apiKey: moviedbApiKey});
    themoviedb:InlineResponse2001 upcomingMovies = check moviedb->getUpcomingMovies();

    // Generate a creative tweet using Azure OpenAI
    string prompt = "Instruction: Generate a creative and short tweet " +
        "below 250 characters about the following " +
        "upcoming and recently released movies. Movies: ";
    foreach int i in 1 ... NO_OF_MOVIES {
        prompt += string `${i}. ${upcomingMovies.results[i - 1].title} `;
    }
    text:Deploymentid_completions_body completionsBody = {
        prompt,
        max_tokens: MAX_TOKENS
    };
    final text:Client azureOpenAI = check new (
        config = {auth: {apiKey: openAIToken}},
        serviceUrl = serviceUrl
    );
    text:Inline_response_200 completion =
        check azureOpenAI->/deployments/[deploymentId]/completions.post(
            API_VERSION, completionsBody
        );

    string? tweetContent = completion.choices[0].text;
    if tweetContent is () {
        return error("Failed to generate a tweet on upcoming and recently released movies.");
    }
    if tweetContent.length() > MAX_TWEET_LENGTH {
        return error("The generated tweet exceeded the maximum supported character length.");
    }

    // Tweet it out!
    final twitter:Client twitter = check new (twitterConfig);
    twitter:Tweet tweet = check twitter->tweet(tweetContent);
    io:println("Tweet: ", tweet.text);
}
```

Create images with DALL-E and Stable Diffusion

Stable Diffusion and OpenAI’s DALL-E image APIs generate or edit images from text-based instructions. The Ballerina library makes uploading, downloading, and processing images a breeze.
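When an image API is asked for `b64_json` output, the image arrives as a Base64 string that must be decoded before it can be stored. Here is a minimal standard-library sketch, assuming a local file is an acceptable destination. `saveBase64Image` and the output paths are hypothetical, and a short stand-in payload (the 8-byte PNG file signature) replaces a real API response.

```ballerina
import ballerina/io;
import ballerina/lang.array;

// Decodes a Base64 image payload and writes the raw bytes to `path`.
// Returns the decoded bytes so the caller can inspect or forward them.
function saveBase64Image(string encodedImage, string path) returns byte[]|error {
    byte[] imageBytes = check array:fromBase64(encodedImage);
    check io:fileWriteBytes(path, imageBytes);
    return imageBytes;
}

public function main() returns error? {
    // Stand-in payload: the PNG file signature, Base64-encoded.
    byte[] pngSignature = [137, 80, 78, 71, 13, 10, 26, 10];
    string encoded = pngSignature.toBase64();
    byte[] decoded = check saveBase64Image(encoded, "./sample.png");
    io:println("Wrote ", decoded.length(), " bytes; round trip ok: ", decoded == pngSignature);
}
```

Returning the decoded bytes, rather than only writing them, lets the same helper feed an upload call such as a Drive connector's byte-array upload.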

```ballerina
public function main() returns error? {
    sheets:Client gSheets = check new ({auth: {token: googleAccessToken}});
    images:Client openAIImages = check new ({auth: {token: openAIToken}});
    drive:Client gDrive = check new ({auth: {token: googleAccessToken}});

    sheets:Column range = check gSheets->getColumn(sheetId, sheetName, "A");
    foreach var cell in range.values {
        string prompt = cell.toString();
        images:CreateImageRequest imagePrompt = {
            prompt,
            response_format: "b64_json"
        };
        images:ImagesResponse imageRes =
            check openAIImages->/images/generations.post(imagePrompt);
        string? encodedImage = imageRes.data[0].b64_json;
        if encodedImage is () {
            return error(string `Failed to generate image for prompt: ${prompt}`);
        }

        // Decode the Base64 string and store the image in Google Drive
        byte[] imageBytes = check array:fromBase64(encodedImage);
        _ = check gDrive->uploadFileUsingByteArray(imageBytes,
            string `${cell}.png`, gDriveFolderId);
    }
}
```

```ballerina
service / on new http:Listener(9090) {
    resource function post products() returns int|error {
        // Get the product details from the last inserted row of the Google Sheet.
        sheets:Range range = check gsheets->getRange(googleSheetId, "Sheet1", "A2:F");
        var [name, benefits, features, productType] = getProduct(range);

        // Generate a product description from OpenAI for the given product name.
        text:CreateCompletionRequest textPrompt = {
            prompt: string `generate a product description in 250 words about ${name}`,
            model: "text-davinci-003",
            // About 250 English words need well over 100 tokens, so a limit of 100 would truncate the text.
            max_tokens: 400
        };
        text:CreateCompletionResponse completionRes =
            check openAIText->/completions.post(textPrompt);

        // Generate a product image from OpenAI for the given product.
        images:CreateImageRequest imagePrmt = {
            prompt: string `${name}, ${benefits}, ${features}`
        };
        images:ImagesResponse imageRes =
            check openAIImages->/images/generations.post(imagePrmt);

        // Create a product in Shopify.
        shopify:CreateProduct product = {
            product: {
                title: name,
                body_html: completionRes.choices[0].text,
                tags: features,
                product_type: productType,
                images: [{src: imageRes.data[0].url}]
            }
        };
        shopify:ProductObject prodObj = check shopify->createProduct(product);
        int? pid = prodObj?.product?.id;
        if pid is () {
            return error("Error in creating product in Shopify");
        }
        return pid;
    }
}
```

```ballerina
type GreetingDetails record {|
    string occasion;
    string recipientEmail;
    string emailSubject;
    string specialNotes?;
|};

service / on new http:Listener(8080) {
    resource function post greetingCard(@http:Payload GreetingDetails req) returns error? {
        string occasion = req.occasion;
        string specialNotes = req.specialNotes ?: "";
        fork {
            // Generate greeting text and design in parallel
            worker greetingWorker returns string|error? {
                text:CreateCompletionRequest textPrompt = {
                    prompt: string `Generate a greeting for a/an ${occasion}.${"\n"}Special notes: ${specialNotes}`,
                    model: "text-davinci-003",
                    max_tokens: 100
                };
                text:CreateCompletionResponse completionRes =
                    check openAIText->/completions.post(textPrompt);
                return completionRes.choices[0].text;
            }
            worker imageWorker returns string|error? {
                images:CreateImageRequest imagePrompt = {
                    prompt: string `Greeting card design for ${occasion}, ${specialNotes}`
                };
                images:ImagesResponse imageRes =
                    check openAIImages->/images/generations.post(imagePrompt);
                return imageRes.data[0].url;
            }
        }
        record {|
            string|error? greetingWorker;
            string|error? imageWorker;
        |} results = wait {greetingWorker, imageWorker};
        string? greeting = check results.greetingWorker;
        string? imageURL = check results.imageWorker;
        if greeting !is string || imageURL !is string {
            return error("Error while generating greeting card");
        }

        // Send an email with the greeting and the image using the Gmail connector
        gmail:MessageRequest messageRequest = {
            recipient: req.recipientEmail,
            subject: req.emailSubject,
            messageBody: string `<p>${greeting}</p> <br/> <img src="${imageURL}">`,
            contentType: gmail:TEXT_HTML
        };
        _ = check gmail->sendMessage(messageRequest, userId = "me");
    }
}
```

```ballerina
public function main(*EmailDetails emailDetails) returns error? {
    fork {
        worker poemWorker returns string|error? {
            text:CreateCompletionRequest textPrompt = {
                prompt: string `Generate a creative poem on the topic ${emailDetails.topic}.`,
                model: "text-davinci-003",
                max_tokens: 1000
            };
            text:CreateCompletionResponse completionRes =
                check openAIText->/completions.post(textPrompt);
            return completionRes.choices[0].text;
        }
        worker imageWorker returns byte[]|error {
            stabilityai:TextToImageRequestBody payload =
                {text_prompts: [{"text": emailDetails.topic, "weight": 1}]};
            stabilityai:ImageRes listResult =
                check stabilityAI->/v1/generation/stable\-diffusion\-v1/text\-to\-image.post(payload);
            string? imageBytesString = listResult.artifacts[0].'base64;
            if imageBytesString is () {
                return error("Image byte string is empty.");
            }
            // The image arrives as a Base64 string; decode it to raw bytes
            byte[] imageBytes = imageBytesString.toBytes();
            var decodedImage = check mime:base64Decode(imageBytes);
            if decodedImage !is byte[] {
                return error("Error in decoding the image byte string.");
            }
            return decodedImage;
        }
    }
    record {|
        string|error? poemWorker;
        byte[]|error imageWorker;
    |} results = wait {poemWorker, imageWorker};
    string? poem = check results.poemWorker;
    byte[]? image = check results.imageWorker;
    if poem !is string || image !is byte[] {
        return error("Error while generating the poem and the image.");
    }

    io:Error? fileWrite = io:fileWriteBytes("./image.png", image);
    if fileWrite is io:Error {
        return error("Error while writing the image to a file.");
    }

    // Convert the poem's line breaks to HTML line breaks for the email body
    string messageBody = poem.trim();
    string:RegExp r = re `\n`;
    messageBody = r.replaceAll(messageBody, "<br>");
    gmail:MessageRequest messageRequest = {
        recipient: emailDetails.recipientEmail,
        subject: emailDetails.topic,
        messageBody,
        contentType: gmail:TEXT_HTML,
        inlineImagePaths: [{imagePath: "./image.png", mimeType: "image/png"}]
    };
    _ = check gmail->sendMessage(messageRequest, userId = "me");
}
```

Transcribe speech or music with Whisper

OpenAI’s Whisper API makes speech and music computable! Transcribe speech or music in many languages into text, or translate it into English. The Ballerina library makes manipulating audio files and processing the results simple.
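OpenAI documents a per-file upload limit for its audio endpoints (25 MB at the time of writing), so long recordings are often sent in pieces. The following sketch splits a byte array into bounded chunks using only the standard library; `chunkBytes` is a hypothetical helper and the chunk size is an arbitrary example. Cutting compressed audio at arbitrary byte offsets can split a frame, so a production pipeline might instead split on time boundaries with an audio tool.

```ballerina
import ballerina/io;

// Splits `data` into consecutive chunks of at most `maxChunkSize` bytes, in order.
function chunkBytes(byte[] data, int maxChunkSize) returns byte[][]|error {
    if maxChunkSize <= 0 {
        return error("maxChunkSize must be positive");
    }
    byte[][] chunks = [];
    int offset = 0;
    while offset < data.length() {
        // The last chunk may be shorter than maxChunkSize.
        int end = int:min(offset + maxChunkSize, data.length());
        chunks.push(data.slice(offset, end));
        offset = end;
    }
    return chunks;
}

public function main() returns error? {
    // Stand-in for downloaded audio; a real payload would come from the HTTP client.
    byte[] audioBytes = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9];
    byte[][] chunks = check chunkBytes(audioBytes, 4);
    foreach byte[] chunk in chunks {
        io:println("Chunk of ", chunk.length(), " bytes");
    }
}
```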

```ballerina
public function main(string audioURL, string toLanguage) returns error? {
    // Creates an HTTP client to download the audio file
    http:Client audioEP = check new (audioURL);
    http:Response httpResp = check audioEP->/get();
    byte[] audioBytes = check httpResp.getBinaryPayload();
    check io:fileWriteBytes(AUDIO_FILE_PATH, audioBytes);

    // Creates a request to translate the audio file to text (English)
    audio:CreateTranslationRequest translationsReq = {
        file: {fileContent: check io:fileReadBytes(AUDIO_FILE_PATH), fileName: AUDIO_FILE},
        model: "whisper-1"
    };

    // Translates the audio file to text (English)
    audio:Client openAIAudio = check new ({auth: {token: openAIToken}});
    audio:CreateTranscriptionResponse transcriptionRes =
        check openAIAudio->/audio/translations.post(translationsReq);
    io:println("Audio text in English: ", transcriptionRes.text);

    // Creates a request to translate the text from English to another language
    text:CreateCompletionRequest completionReq = {
        model: "text-davinci-003",
        prompt: string `Translate the following text from English to ${toLanguage}: ${
            transcriptionRes.text}`,
        temperature: 0.7,
        max_tokens: 256,
        top_p: 1,
        frequency_penalty: 0,
        presence_penalty: 0
    };

    // Translates the text from English to another language
    text:Client openAIText = check new ({auth: {token: openAIToken}});
    text:CreateCompletionResponse completionRes =
        check openAIText->/completions.post(completionReq);
    string? translatedText = completionRes.choices[0].text;
    if translatedText is () {
        return error("Failed to translate the given audio.");
    }
    io:println("Translated text: ", translatedText);
}
```

```ballerina
public function main(string podcastURL) returns error? {
    // Creates an HTTP client to download the audio file
    http:Client podcastEP = check new (podcastURL);
    http:Response httpResp = check podcastEP->/get();
    byte[] audioBytes = check httpResp.getBinaryPayload();
    check io:fileWriteBytes(AUDIO_FILE_PATH, audioBytes);

    // Creates a request to transcribe the first BINARY_LENGTH bytes of the audio file
    // (or the whole file, if it is shorter, so that slice() cannot go out of range)
    byte[] podcastBytes = check io:fileReadBytes(AUDIO_FILE_PATH);
    audio:CreateTranscriptionRequest transcriptionsReq = {
        file: {
            fileContent: podcastBytes.slice(0, int:min(BINARY_LENGTH, podcastBytes.length())),
            fileName: AUDIO_FILE
        },
        model: "whisper-1"
    };

    // Converts the audio file to text using the OpenAI speech-to-text API
    audio:Client openAIAudio = check new ({auth: {token: openAIToken}});
    audio:CreateTranscriptionResponse transcriptionsRes =
        check openAIAudio->/audio/transcriptions.post(transcriptionsReq);
    io:println("Text from the audio: ", transcriptionsRes.text);

    // Creates a request to summarize the text
    text:CreateCompletionRequest textCompletionReq = {
        model: "text-davinci-003",
        prompt: string `Summarize the following text to 100 characters: ${
            transcriptionsRes.text}`,
        temperature: 0.7,
        max_tokens: 256,
        top_p: 1,
        frequency_penalty: 0,
        presence_penalty: 0
    };

    // Summarizes the text using the OpenAI text completion API
    text:Client openAIText = check new ({auth: {token: openAIToken}});
    text:CreateCompletionResponse completionRes =
        check openAIText->/completions.post(textCompletionReq);
    string? summarizedText = completionRes.choices[0].text;
    if summarizedText is () {
        return error("Failed to summarize the given audio.");
    }
    io:println("Summarized text: ", summarizedText);

    // Tweet it out!
    twitter:Client twitter = check new (twitterConfig);
    var tweet = check twitter->tweet(summarizedText);
    io:println("Tweet: ", tweet);
}
```

Fine-tune models with your data to create your own models

OpenAI’s fine-tuning API lets you create a model that understands your world. Use Ballerina’s ability to integrate business APIs and systems to gather your business data, fine-tune a model on it, and make that model available across your business.
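The fine-tune request uploads a training file, and the legacy fine-tunes endpoint expects that file as JSON Lines: one JSON object with `prompt` and `completion` fields per line. A minimal sketch of turning typed records into that format, assuming that shape; the `TrainingExample` type, `toJsonl`, the placeholder rows, and the `./train.jsonl` path are all hypothetical.

```ballerina
import ballerina/io;

// Hypothetical record for one prompt/completion training example.
type TrainingExample record {|
    string prompt;
    string completion;
|};

// Serializes the examples as JSON Lines: one JSON object per line.
function toJsonl(TrainingExample[] examples) returns string {
    string[] lines = from TrainingExample example in examples
        select example.toJsonString();
    return string:'join("\n", ...lines);
}

public function main() returns error? {
    // Placeholder rows; in practice these would be read from a business system.
    TrainingExample[] examples = [
        {prompt: "Question 1 ->", completion: " Answer 1"},
        {prompt: "Question 2 ->", completion: " Answer 2"}
    ];
    string jsonl = toJsonl(examples);
    // Hypothetical output path for the training file.
    check io:fileWriteString("./train.jsonl", jsonl);
    io:println(jsonl);
}
```

Keeping the examples as a closed record type means a row with a missing or misspelled field is rejected at compile time instead of producing a malformed training file.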

```ballerina
public function main() returns error? {
    finetunes:Client openAIFineTunes = check new ({auth: {token: openAIToken}});

    // Upload the training data file
    finetunes:CreateFileRequest fileRequest = {
        file: {
            fileContent: check io:fileReadBytes(TRAIN_DATA_FILE_PATH),
            fileName: TRAIN_DATA_FILE_NAME
        },
        purpose: "fine-tune"
    };
    finetunes:OpenAIFile fileResponse = check openAIFineTunes->/files.post(fileRequest);
    io:println(string `Training file uploaded successfully with ID: ${fileResponse.id}`);

    // Start a fine-tune job on the uploaded file
    finetunes:CreateFineTuneRequest fineTuneRequest = {
        training_file: fileResponse.id,
        model: "ada",
        n_epochs: 4
    };
    finetunes:FineTune fineTuneResponse =
        check openAIFineTunes->/fine\-tunes.post(fineTuneRequest);
    io:println(string `Fine-tune job started successfully with ID: ${fineTuneResponse.id}`);
}
```

Simplify vector database management

Ballerina comes with built-in connectors for vector databases such as Weaviate and Pinecone. Vector databases store and retrieve high-dimensional vectors and are a common building block in AI use cases.
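Conceptually, a `nearVector` query with `limit: 1` asks the database for the stored vector that scores highest against the query vector. The sketch below does the same thing by brute force over an in-memory list, assuming unit-length vectors (OpenAI documents its embeddings as normalized to length 1), for which the dot product equals cosine similarity. The `Entry` and `Scored` types and the `nearest` function are hypothetical; real vector databases use approximate indexes (Weaviate, for example, uses HNSW) to avoid scanning every vector.

```ballerina
import ballerina/io;

// Hypothetical in-memory stand-in for a row in a vector database.
type Entry record {|
    string id;
    float[] embedding;
|};

// An entry id paired with its similarity score to the query.
type Scored record {|
    string id;
    float score;
|};

// Dot product of two vectors of the same dimension.
function dot(float[] a, float[] b) returns float|error {
    if a.length() != b.length() {
        return error("vectors must have the same dimension");
    }
    float sum = 0.0;
    foreach int i in 0 ..< a.length() {
        sum += a[i] * b[i];
    }
    return sum;
}

// Brute-force k-nearest-neighbour search: scores every entry and keeps the top k ids.
function nearest(float[] query, Entry[] entries, int k) returns string[]|error {
    Scored[] scored = [];
    foreach Entry entry in entries {
        Scored item = {id: entry.id, score: check dot(query, entry.embedding)};
        scored.push(item);
    }
    string[] ids = from Scored s in scored
        order by s.score descending
        limit k
        select s.id;
    return ids;
}

public function main() returns error? {
    // Toy 2-dimensional unit vectors; real embeddings have far more dimensions.
    Entry[] entries = [
        {id: "refunds", embedding: [1.0, 0.0]},
        {id: "shipping", embedding: [0.6, 0.8]},
        {id: "careers", embedding: [0.0, 1.0]}
    ];
    string[] top = check nearest([1.0, 0.0], entries, 2);
    io:println(top);
}
```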

```ballerina
service / on new http:Listener(8080) {
    resource function get answer(string question) returns weaviate:JsonObject|error? {
        // Retrieve OpenAI embeddings for the input question
        embeddings:CreateEmbeddingResponse embeddingResponse = check openai->/embeddings.post({
            model: MODEL,
            input: question
        });
        float[] vector = embeddingResponse.data[0].embedding;

        // Query Weaviate for the closest vector using GraphQL
        string graphQLQuery = string `{
            Get {
                ${CLASS_NAME} (
                    nearVector: {
                        vector: ${vector.toString()}
                    }
                    limit: 1
                ){
                    question
                    answer
                    _additional {
                        certainty,
                        id
                    }
                }
            }
        }`;
        weaviate:GraphQLResponse results = check weaviate->/graphql.post({query: graphQLQuery});
        return results.data;
    }
}
```

Libraries for AI operations

Built-in mathematical operations, such as distance measures and optimizations, are common building blocks in AI use cases.
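Cosine similarity is the dot product of two vectors divided by the product of their lengths, so it is 1.0 for vectors pointing the same way, 0.0 for orthogonal vectors, and -1.0 for opposite ones. The hand-written function below sketches that definition in plain Ballerina; it is an illustration, not the implementation behind `vector:cosineSimilarity`, and the zero-vector error is a design choice of this sketch.

```ballerina
import ballerina/io;

// Cosine similarity: dot(a, b) / (|a| * |b|).
function cosineSimilarity(float[] a, float[] b) returns float|error {
    if a.length() != b.length() || a.length() == 0 {
        return error("vectors must be non-empty and of equal length");
    }
    float dotProduct = 0.0;
    float normA = 0.0; // sum of squares of a
    float normB = 0.0; // sum of squares of b
    foreach int i in 0 ..< a.length() {
        dotProduct += a[i] * b[i];
        normA += a[i] * a[i];
        normB += b[i] * b[i];
    }
    if normA == 0.0 || normB == 0.0 {
        return error("cosine similarity is undefined for a zero vector");
    }
    return dotProduct / (normA.sqrt() * normB.sqrt());
}

public function main() returns error? {
    io:println(check cosineSimilarity([3.0, 4.0], [6.0, 8.0])); // same direction
    io:println(check cosineSimilarity([1.0, 0.0], [0.0, 1.0])); // orthogonal
    io:println(check cosineSimilarity([1.0, 0.0], [-1.0, 0.0])); // opposite
}
```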

```ballerina
public function main() returns error? {
    final embeddings:Client openAIEmbeddings = check new ({auth: {token: openAIToken}});
    string text1 = "What are you thinking?";
    string text2 = "What is on your mind?";

    // Request embeddings for both texts in a single call
    embeddings:CreateEmbeddingRequest embeddingReq = {
        model: "text-embedding-ada-002",
        input: [text1, text2]
    };
    embeddings:CreateEmbeddingResponse embeddingRes =
        check openAIEmbeddings->/embeddings.post(embeddingReq);
    float[] text1Embedding = embeddingRes.data[0].embedding;
    float[] text2Embedding = embeddingRes.data[1].embedding;

    // Compare the two embeddings
    float similarity = vector:cosineSimilarity(text1Embedding, text2Embedding);
    io:println("The similarity between the given two texts: ", similarity);
}
```

Effortlessly create impactful business use cases

Take advantage of Ballerina's OpenAI, Azure, and VectorDB connectors to craft powerful solutions like Slack bots and Q&A bots that enhance customer engagement and optimize business operations.
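Chat bots that keep a rolling history have to drop old messages to stay within the model's token limit. Slicing the history to its last N entries also drops the initial system message once the conversation grows. A minimal sketch that keeps the system message and trims only the rest, assuming role values of "system", "user", and "assistant"; the `ChatMessage` type here and `trimHistory` are hypothetical.

```ballerina
import ballerina/io;

// Hypothetical message shape with chat-style role/content pairs.
type ChatMessage record {|
    string role;
    string content;
|};

// Keeps the leading system message, if present, plus the newest
// `maxMessages` other messages, preserving their order.
function trimHistory(ChatMessage[] history, int maxMessages) returns ChatMessage[] {
    ChatMessage[] trimmed = [];
    int firstOther = 0;
    if history.length() > 0 && history[0].role == "system" {
        trimmed.push(history[0]);
        firstOther = 1;
    }
    // Start of the window holding the newest non-system messages.
    int windowStart = int:max(firstOther, history.length() - maxMessages);
    foreach int i in windowStart ..< history.length() {
        trimmed.push(history[i]);
    }
    return trimmed;
}

public function main() {
    ChatMessage[] history = [
        {role: "system", content: "You are an AI slack bot to assist with user questions."},
        {role: "user", content: "Q1"},
        {role: "assistant", content: "A1"},
        {role: "user", content: "Q2"},
        {role: "assistant", content: "A2"}
    ];
    foreach ChatMessage message in trimHistory(history, 2) {
        io:println(message.role, ": ", message.content);
    }
}
```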

```ballerina
service /slack on new http:Listener(8080) {
    map<ChatMessage[]> chatHistory = {};

    resource function post events(http:Request request) returns Response|error {
        if !check verifyRequest(request) {
            return error("Request verification failed");
        }
        map<string> params = check request.getFormParams();
        string? channelName = params["channel_name"];
        string? requestText = params["text"];
        if channelName is () || requestText is () {
            return error("Invalid values in the request parameters for channel_name or text");
        }

        ChatMessage[] history = self.chatHistory[channelName] ?:
            [{
                role: SYSTEM,
                content: "You are an AI slack bot to assist with user questions."
            }];
        history.push({role: USER, content: requestText});

        chat:Inline_response_200 completion =
            check azureOpenAI->/deployments/[deploymentId]/chat/completions.post(
                API_VERSION, {messages: history}
            );
        chat:Inline_response_200_message? response = completion.choices[0].message;
        string? responseText = response?.content;
        if response is () || responseText is () {
            return error("Error in response generation");
        }
        history.push({role: ASSISTANT, content: responseText});

        // Limit the history to MAX_MESSAGES (25) messages to stay within the token limit.
        if history.length() > MAX_MESSAGES {
            history = history.slice(history.length() - MAX_MESSAGES);
        }
        self.chatHistory[channelName] = history;
        return {response_type: "in_channel", text: responseText};
    }
}
```

```ballerina
service / on new http:Listener(8080) {
    function init() returns error? {
        // Embed each sheet row (title and content) and upsert the vectors into Pinecone
        sheets:Range range = check gSheets->getRange(sheetId, sheetName, "A2:B");
        pinecone:Vector[] vectors = [];
        foreach any[] row in range.values {
            string title = <string>row[0];
            string content = <string>row[1];
            float[] vector = check getEmbedding(string `${title} ${"\n"} ${content}`);
            vectors[vectors.length()] =
                {id: title, values: vector, metadata: {"content": content}};
        }
        pinecone:UpsertResponse response =
            check pineconeClient->/vectors/upsert.post({vectors, namespace: NAMESPACE});
        if response.upsertedCount != range.values.length() {
            return error("Failed to insert embedding vectors to Pinecone.");
        }
        io:println("Successfully inserted embedding vectors to Pinecone.");
    }

    resource function get answer(string question) returns string?|error {
        string prompt = check constructPrompt(question);
        text:CreateCompletionRequest prmt = {
            prompt: prompt,
            model: "text-davinci-003",
            max_tokens: 2000
        };
        text:CreateCompletionResponse completionRes = check openAIText->/completions.post(prmt);
        return completionRes.choices[0].text;
    }
}
```

Create AI-powered APIs, automations, and event handlers

Effortlessly tackle any AI-powered API integration by leveraging the network abstractions of Ballerina to create APIs, automations, and event handlers for your applications.
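The Q&A services on this page call a `constructPrompt` helper whose body is not shown. One plausible shape for it, sketched under stated assumptions: pick the document whose embedding is most similar to the question's embedding and place it in the prompt as context. To keep the sketch runnable offline, `buildPrompt` (a hypothetical name) takes the question embedding as a parameter instead of calling an embeddings API, assumes unit-length vectors so the dot product can serve as the similarity score, and uses a prompt wording that is an illustration only.

```ballerina
import ballerina/io;

// Dot product; equals cosine similarity when both vectors have unit length.
function dot(float[] a, float[] b) returns float {
    float sum = 0.0;
    foreach int i in 0 ..< int:min(a.length(), b.length()) {
        sum += a[i] * b[i];
    }
    return sum;
}

// Hypothetical prompt builder: selects the best-matching document as context.
function buildPrompt(string question, float[] questionEmbedding,
        map<string> documents, map<float[]> docEmbeddings) returns string|error {
    string? bestTitle = ();
    float bestScore = -float:Infinity;
    foreach var [title, embedding] in docEmbeddings.entries() {
        float score = dot(questionEmbedding, embedding);
        if score > bestScore {
            bestScore = score;
            bestTitle = title;
        }
    }
    if bestTitle is () {
        return error("No documents are available to answer from.");
    }
    string context = documents[bestTitle] ?: "";
    return string `Answer the question using only the context below.${"\n"}`
        + string `Context: ${context}${"\n"}Question: ${question}${"\n"}Answer:`;
}

public function main() returns error? {
    // Placeholder documents with toy 2-dimensional unit-length embeddings.
    map<string> documents = {"Refunds": "Placeholder text about refunds.", "Shipping": "Placeholder text about shipping."};
    map<float[]> docEmbeddings = {"Refunds": [1.0, 0.0], "Shipping": [0.0, 1.0]};
    // Assumed question embedding; a real service would request one from the embeddings API.
    string prompt = check buildPrompt("How do refunds work?", [0.9, 0.1], documents, docEmbeddings);
    io:println(prompt);
}
```

Selecting a single best document keeps the prompt short; a variant could concatenate the top few documents while staying under the model's context limit.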

```ballerina
service / on new http:Listener(8080) {
    map<string> documents = {};
    map<float[]> docEmbeddings = {};

    function init() returns error? {
        sheets:Range range = check gSheets->getRange(sheetId, sheetName, "A2:B");
        // Populate the maps with the content and embedding of each document.
        foreach any[] row in range.values {
            string title = <string>row[0];
            string content = <string>row[1];
            self.documents[title] = content;
            self.docEmbeddings[title] = check getEmbedding(string `${title} ${"\n"} ${content}`);
        }
    }

    resource function get answer(string question) returns string?|error {
        string prompt = check constructPrompt(question, self.documents, self.docEmbeddings);
        text:CreateCompletionRequest prmt = {
            prompt: prompt,
            model: "text-davinci-003",
            // Without max_tokens, the completions API falls back to a very short default length.
            max_tokens: 2000
        };
        text:CreateCompletionResponse completionRes =
            check openAIText->/completions.post(prmt);
        return completionRes.choices[0].text;
    }
}
```

Write robust API-powered AI applications

Take on the unpredictable world of distributed systems with Ballerina's built-in language and library features, such as configurable retries on HTTP-based clients. Writing robust API-powered AI applications is now a breeze for every developer, no matter the challenges!
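An `http:RetryConfig` takes care of retries for HTTP-based clients. The same idea (an initial interval, a retry count, and a back-off multiplier) can be applied to any operation. Here is a minimal sketch of that pattern; `Operation`, `retryWithBackoff`, and the flaky stand-in call are hypothetical, and the exact timing semantics of `http:RetryConfig` may differ from this loop.

```ballerina
import ballerina/io;
import ballerina/lang.runtime;

// Any operation that may fail transiently.
type Operation function () returns string|error;

// Hypothetical stand-in for a flaky remote call: fails twice, then succeeds.
int attempts = 0;

function flakyCall() returns string|error {
    attempts += 1;
    if attempts < 3 {
        return error(string `transient failure on attempt ${attempts}`);
    }
    return "ok";
}

// Calls `operation`; after each failure, sleeps and retries up to `count` times,
// multiplying the sleep interval by `backOffFactor` after every retry.
function retryWithBackoff(Operation operation, int count, decimal interval,
        decimal backOffFactor) returns string|error {
    decimal delay = interval;
    string|error result = operation();
    int retries = 0;
    while result is error && retries < count {
        runtime:sleep(delay);
        delay *= backOffFactor;
        retries += 1;
        result = operation();
    }
    return result;
}

public function main() returns error? {
    string result = check retryWithBackoff(flakyCall, 3, 0.1, 2.0);
    io:println(result, " after ", attempts, " attempts");
}
```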

```ballerina
public function main(string filePath) returns error? {
    http:RetryConfig retryConfig = {
        interval: 5, // Initial retry interval in seconds.
        count: 3, // Number of retry attempts before stopping.
        backOffFactor: 2.0 // Multiplier of the retry interval.
    };
    final text:Client openAIText = check new ({auth: {token: openAIToken}, retryConfig});

    text:CreateEditRequest editReq = {
        input: check io:fileReadString(filePath),
        instruction: "Fix grammar and spelling mistakes.",
        model: "text-davinci-edit-001"
    };
    text:CreateEditResponse editRes = check openAIText->/edits.post(editReq);

    string text = check editRes.choices[0].text.ensureType();
    io:println(string `Corrected: ${text}`);
}
```

Concurrency simplified for AI development

Ballerina's concurrency model is ideal for writing API-powered AI applications. Its sequence diagrams and concurrency control capabilities make it easy to manage and visualize complex operations, leading to more efficient and reliable AI solutions.
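Running two independent API calls in a `fork` means the total latency is roughly that of the slower call rather than the sum of both. The following self-contained sketch makes that visible. The two remote calls are replaced by hypothetical functions that just sleep for half a second, and the example.com URL is a placeholder. It measures the elapsed time with `time:monotonicNow()`.

```ballerina
import ballerina/io;
import ballerina/lang.runtime;
import ballerina/time;

// Hypothetical stand-ins for the two remote calls; each sleeps to simulate latency.
function generateGreeting(string occasion) returns string {
    runtime:sleep(0.5);
    return string `Happy ${occasion}!`;
}

function generateImageUrl(string occasion) returns string {
    runtime:sleep(0.5);
    return string `https://example.com/cards/${occasion}.png`;
}

// Runs both calls in parallel workers and waits for both results.
function createCard(string occasion) returns [string, string] {
    fork {
        worker greetingWorker returns string {
            return generateGreeting(occasion);
        }
        worker imageWorker returns string {
            return generateImageUrl(occasion);
        }
    }
    // The mapping wait returns one record field per worker name.
    record {|string greetingWorker; string imageWorker;|} results =
        wait {greetingWorker, imageWorker};
    return [results.greetingWorker, results.imageWorker];
}

public function main() {
    decimal startedAt = time:monotonicNow();
    [string, string] [greeting, imageUrl] = createCard("birthday");
    decimal elapsed = time:monotonicNow() - startedAt;
    // With parallel workers, elapsed should be close to 0.5 s rather than 1 s.
    io:println(greeting, " ", imageUrl, " (", elapsed, " s)");
}
```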

```ballerina
fork {
    // Generate greeting text and design in parallel
    worker greetingWorker returns string|error? {
        string prompt =
            string `Generate a greeting for a/an ${occasion}.${"\n"}Special notes: ${specialNotes}`;
        text:CreateCompletionRequest textPrompt = {
            prompt,
            model: "text-davinci-003",
            max_tokens: 100
        };
        text:CreateCompletionResponse completionRes =
            check openaiText->/completions.post(textPrompt);
        return completionRes.choices[0].text;
    }
    worker imageWorker returns string|error? {
        string prompt = string `Greeting card design for ${occasion}, ${specialNotes}`;
        images:CreateImageRequest imagePrompt = {
            prompt
        };
        images:ImagesResponse imageRes =
            check openaiImages->/images/generations.post(imagePrompt);
        return imageRes.data[0].url;
    }
}
```

(Extra!) Trivial hosting in WSO2 Choreo iPaaS

Manual integrations? Scheduled integrations (cron jobs)? Triggered integrations? Integrations as APIs? No problem! Write the code, attach the repo to WSO2 Choreo, and let it do the rest.